I am an Assistant Professor in the School of Interactive Computing at Georgia Tech and a Researcher at NVIDIA AI. I work at the intersection of Robotics and Machine Learning. I received my Ph.D. in CS from Stanford University (2015-2021), advised by Fei-Fei Li and Silvio Savarese, and my B.S. from Columbia University (SEAS '15). I've spent time at DeepMind UK (2019), ZOOX (2017), Autodesk Research (2016), CMU RI (2014), and Columbia Robotics Lab (2013-2015).

Our lab is hiring a postdoctoral researcher! If you are interested, please contact me directly via email.

Research opportunities: please fill out this questionnaire and email me once you have submitted it.

Email / Google Scholar / CV (Sep 2024) / GitHub / Twitter

I direct the Robot Learning and Reasoning Lab (RL2). We aim to build general-purpose and adaptable "brains" for robots in home, factory, healthcare, and search & rescue missions alike. Our work focuses on endowing robots with both flexible high-level planning abilities ("what to do next") and robust low-level sensorimotor control ("how to do it"). The research draws equally from Robotics and Machine Learning, with the following themes:

Simar Kareer, Dhruv Patel, Ryan Punamiya, Pranay Mathur, Shuo Cheng, Chen Wang, Judy Hoffman, Danfei Xu. In submission. Robot Learning from Egocentric Human Data.

Shuo Cheng, Caelan Garrett, Ajay Mandlekar, Danfei Xu. CoRL 2024. Solving multi-step manipulation problems with zero-shot generalization.

Kelin Yu, Yunhai Han, Qixian Wang, Vaibhav Saxena, Danfei Xu, Ye Zhao. CoRL 2024. Learning tactile control policies from human hand demonstrations.

Utkarsh Mishra, Yongxin Chen, Danfei Xu. CoRL 2024. A compositional generative model that can plan for long-horizon, complex coordinated manipulation tasks.

Matthew Bronars, Shuo Cheng, Danfei Xu. IEEE RA-L 2024. Learning to generate legible robot motion (motion with expressive intent) with conditional diffusion models.

Shangjie Xue, Jesse Dill, Pranay Mathur, Frank Dellaert, Panagiotis Tsiotras, Danfei Xu. CVPR 2024. A principled Bayesian framework for modeling uncertainty in NeRF for active mapping.

|

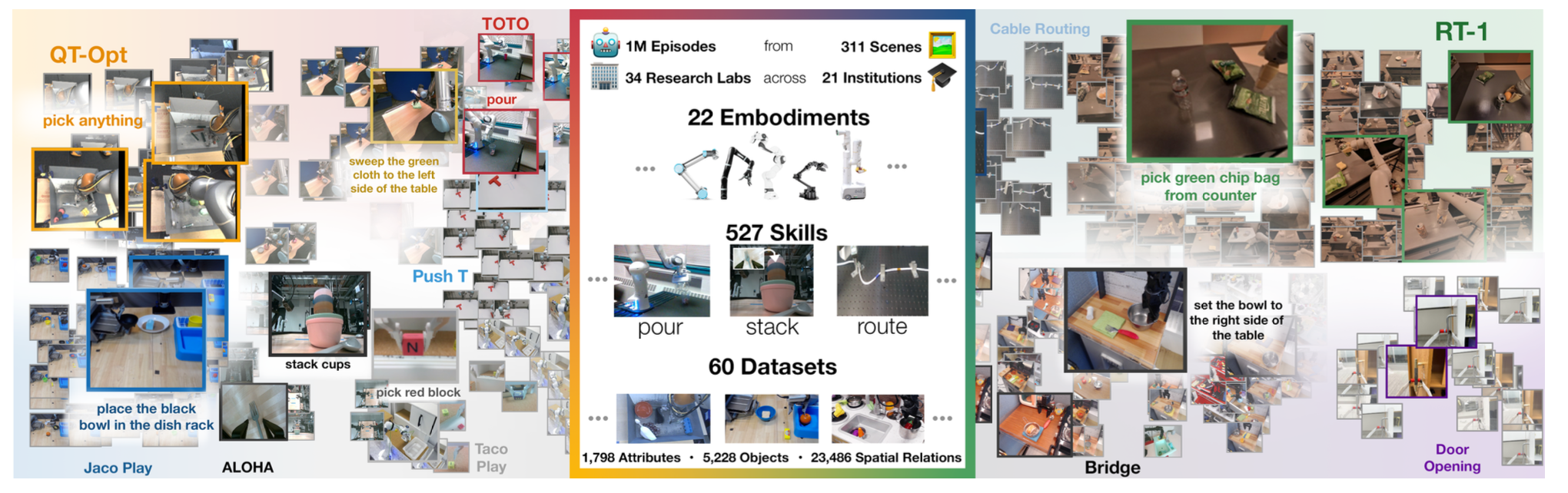

Many Author ICRA 2024, Best Paper Award |

Jiawei Yang, Boris Ivanovic, Or Litany, Xinshuo Weng, Seung Wook Kim, Boyi Li, Tong Che, Danfei Xu, Sanja Fidler, Marco Pavone, Yue Wang. ICLR 2024. Unsupervised 4D representation learning from videos.

Vaibhav Saxena, Yotto Koga, Danfei Xu. TMLR 2024. High-precision manipulation with generative action modeling.

Utkarsh Mishra, Shangjie Xue, Yongxin Chen, Danfei Xu. CoRL 2023. A new algorithm that chains together skill-level diffusion models to solve long-horizon manipulation tasks.

Shangjie Xue, Shuo Cheng, Pujith Kachana, Danfei Xu. CoRL 2023; ICRA'23 Workshop on Representing and Manipulating Deformable Objects (Best Paper Finalist). A new field-based representation (occupancy density) to model and optimize granular object manipulation.

Sachit Kuhar, Shuo Cheng, Shivang Chopra, Matthew Bronars, Danfei Xu. CoRL 2023. An offline imitation learning algorithm that learns from mixed-quality human demonstrations.

Ajay Mandlekar*, Caelan Garrett*, Danfei Xu, Dieter Fox. CoRL 2023. We present HITL-TAMP, a system that combines human teleoperation with automated task and motion planning (TAMP) to efficiently train robots for complex tasks.

Ziyuan Zhong, Davis Rempe, Yuxiao Chen, Boris Ivanovic, Yulong Cao, Danfei Xu, Marco Pavone, Baishakhi Ray. CoRL 2023 (Oral). A GPT-4-guided trajectory diffusion model.

Chen Wang, Linxi Fan, Jiankai Sun, Ruohan Zhang, Li Fei-Fei, Danfei Xu, Yuke Zhu, Anima Anandkumar. CoRL 2023 (Best Paper and Best Systems Paper Finalist). We leverage human video data to train a planner that guides robust low-level task execution.

Shuo Cheng, Danfei Xu. Robotics and Automation Letters (RA-L) 2023, Best Paper Honorable Mention. We use Task and Motion Planning (TAMP) as guidance for learning generalizable and composable sensorimotor skills.

Ishika Singh, Valts Blukis, Arsalan Mousavian, Ankit Goyal, Danfei Xu, Jonathan Tremblay, Dieter Fox, Jesse Thomason, Animesh Garg. ICRA 2023. We harness the power of large language models to generate program-like task plans.

Danfei Xu*, Yuxiao Chen*, Boris Ivanovic, Marco Pavone. ICRA 2023. Hierarchical imitation for generating diverse, realistic, and robust traffic behaviors.

Ziyuan Zhong, Davis Rempe, Danfei Xu, Yuxiao Chen, Sushant Veer, Tong Che, Baishakhi Ray, Marco Pavone. ICRA 2023. Learns a behavior prior through diffusion-based imitation, then samples desired behaviors using conditional diffusion and Signal Temporal Logic (STL) rules.

Yulong Cao, Danfei Xu, Xinshuo Weng, Zhuoqing Mao, Anima Anandkumar, Chaowei Xiao, Marco Pavone. CoRL 2022 (Oral). Defending against attacks that target generative behavior models.

Yulong Cao, Chaowei Xiao, Anima Anandkumar, Danfei Xu, Marco Pavone. ECCV 2022. Physically plausible attacks on behavior forecasting models.

Chen Wang, Danfei Xu, Li Fei-Fei. Robotics and Automation Letters (RA-L) 2022. Building generalizable world models for planning with unseen objects and environments.

Ajay Mandlekar, Danfei Xu, Josiah Wong, Soroush Nasiriany, Chen Wang, Rohun Kulkarni, Li Fei-Fei, Silvio Savarese, Yuke Zhu, Roberto Martin-Martin. CoRL 2021 (Oral). [code+dataset] [website] [blog post] A large-scale study on learning manipulation skills from human demonstrations.

Ajay Mandlekar, Danfei Xu, Roberto Martin-Martin, Yuke Zhu, Li Fei-Fei, Silvio Savarese. arXiv preprint, 2021. Human-in-the-loop learning for complex manipulation tasks.

Chen Wang, Claudia D'Arpino, Danfei Xu, Li Fei-Fei, Karen Liu, Silvio Savarese. CoRL 2021. Learning human-robot collaboration policies from human-human collaboration demonstrations.

Chen Wang*, Rui Wang*, Ajay Mandlekar, Li Fei-Fei, Silvio Savarese, Danfei Xu. IROS 2021. A learnable action space for recovering human hand-eye coordination behaviors by learning from human demonstrations.

Danfei Xu, Ajay Mandlekar, Roberto Martin-Martin, Yuke Zhu, Silvio Savarese, Li Fei-Fei. ICRA 2021 (long version); NeurIPS Workshop on Object Representations for Learning and Reasoning, 2020, Oral Presentation (short version). We extend the classical definition of affordance to enable generalizable long-horizon planning.

Danfei Xu, Misha Denil. CoRL 2020 (long version); NeurIPS Deep Reinforcement Learning Workshop 2019, Late-Breaking Paper (short version). An algorithmic framework that simultaneously addresses the reward delusion problem in supervised reward learning and the overfitting discriminator problem in adversarial imitation learning.

Chien-Yi Chang, De-An Huang, Danfei Xu, Ehsan Adeli, Li Fei-Fei, Juan Carlos Niebles. ECCV, 2020. Learning to plan from instructional videos.

Ajay Mandlekar*, Danfei Xu*, Roberto Martin-Martin, Silvio Savarese, Li Fei-Fei. RSS, 2020. Learning visuomotor policies that can generalize across long-horizon tasks by modeling latent compositional structures.

Chen Wang, Roberto Martin-Martin, Danfei Xu, Jun Lv, Cewu Lu, Li Fei-Fei, Silvio Savarese, Yuke Zhu. ICRA, 2020. Real-time category-level 6D object tracking from RGB-D data.

Danfei Xu, Roberto Martin-Martin, De-An Huang, Yuke Zhu, Silvio Savarese, Li Fei-Fei. NeurIPS, 2019. A flexible neural network architecture for learning to plan from video demonstrations.

De-An Huang, Danfei Xu, Yuke Zhu, Silvio Savarese, Li Fei-Fei, Juan Carlos Niebles. IROS, 2019. One-shot imitation learning via hybrid neural-symbolic planning.

William B. Shen, Danfei Xu, Yuke Zhu, Leonidas Guibas, Li Fei-Fei, Silvio Savarese. ICCV, 2019. Learning a generalizable navigation policy from mid-level visual representations.

Chen Wang, Danfei Xu, Yuke Zhu, Roberto Martin-Martin, Cewu Lu, Li Fei-Fei, Silvio Savarese. CVPR, 2019. Dense RGB-depth sensor fusion for 6D object pose estimation.

De-An Huang*, Suraj Nair*, Danfei Xu*, Yuke Zhu, Animesh Garg, Li Fei-Fei, Silvio Savarese, Juan Carlos Niebles. CVPR, 2019 (Oral). Generating executable task graphs from video demonstrations.

Danfei Xu*, Suraj Nair*, Yuke Zhu, Julian Gao, Animesh Garg, Li Fei-Fei, Silvio Savarese. ICRA, 2018. [website] [video] [Two Minute Papers] [blog post] Neural Task Programming (NTP) is a meta-learning framework that learns to generate robot-executable neural programs from a task demonstration video.

Danfei Xu, Ashesh Jain, Dragomir Anguelov. CVPR, 2018. End-to-end 3D bounding box estimation via sensor fusion.

Danfei Xu, Yuke Zhu, Christopher B. Choy, Li Fei-Fei. CVPR, 2017. We propose an end-to-end model that jointly infers object categories, locations, and relationships. The model learns to iteratively improve its predictions by passing messages on a scene graph.

Christopher B. Choy, Danfei Xu*, JunYoung Gwak*, Silvio Savarese. ECCV, 2016. We propose an end-to-end 3D reconstruction model that unifies single- and multi-view reconstruction.

Yinxiao Li, Yan Wang, Yonghao Yue, Danfei Xu, Michael Case, Shih-Fu Chang, Eitan Grinspun, Peter K. Allen. IEEE TASE, 2016. Deformable object manipulation with an application to a personal assistive robot. This is the journal paper of our "laundry robot" series.

Danfei Xu, Hernan Badino, Daniel Huber. IROS, 2015. Vision-based localization on a probabilistic road network.

Danfei Xu, Gerald E. Loeb, Jeremy Fishel. ICRA, 2013. Object classification using multi-modal tactile sensing.