School of computer science

Georgia Institute of Technology

CS4290/CS6290HPCA Fall 2010

Programming assignment #2

Due: (part 1, program): Wednesday, November 3, 6:00 pm, Report: Thursday, Nov 4 before class Friday, October 29, 6:00 pm, report: Tuesday , Nov 2 before class

Hyesoon Kim, Instructor

This is an individual assignment. You can discuss this assignment with other classmates but you should do your assignment individually. Please follow the submission instructions. If you do not follow the submission file names, you will not receive the full credit. Please check the class homepage to see the latest update. Your code must run on killerbee[1-5].cc.gatech.edu with g++4.1 or warp clusters

Overview

This assignment is composed of two parts: Part 1: building the simulator, Part 2: simulation and analysis

Part 1(100 pts): Building a Superscalar processor

In this assignment, you will improve your pipeline design to have (1) branch predictor and (2) Superscalar and (3) Tomasulo's algorithm (register renaming and out of

order scheduling). You will extend your Lab #1 pipeline design. We provide add_me.txt file is provided. Please add the code at appropriate places. userknob.h and simknob.h files are updated. Please download the new files.

(1) Implement a g-share branch predictor

Add a g-share branch predictor in the FE stage. When a processor

fetches a conditional branch instruction (cf_type must be CF_CBR), it

accesses the branch predictor. All other branches, we assume that they

are correctly predicted. There are no pipeline bubbles for other

branches. If a branch is mispredicted, the processor should not fetch

instructions from the correct path. i.e., the processor should not

call the get_op function. Instead, it should stall the pipeline until

the branch instruction is resolved. After the branch is resolved, in

the following cycle the correct PC address is forward to the PC

latch. From the next cycle the processor can start to fetch

instructions from the correct path. Note that in a real hardware, the

processor will keep fetching wrong-path instructions until a branch is

resolved. Once a misprediction is discovered, the processor flushes

the pipeline and starts to fetch instructions from the correct

path. Since we are building a trace-driven simulator, we cannot send

wrong-path instructions into the pipeline. That's why we are stalling

the pipeline to model the time delay between a branch prediction and

branch resolution. To simplify the problem, after a branch is

predicted, you also update the branch predictor including BHR with the

correct value at the same cycle. In a real hardware, the branch

predictor is speculatively updated with predicted values. When a

branch misprediction is detected, the BHR value is recovered. However,

since we do not fetch wrong-path instructions, this step is

unnecessary. The 2bit counters in the PHT table are initialized with

"10" weekly taken.

Note that, in a real hardware, when a branch misprediction is

detected, the processor cannot start to rename until all correct path

instructions are retired. Again, to simplify the simulator, the

processor starts to resume as soon as the correct-path PC value is

available.

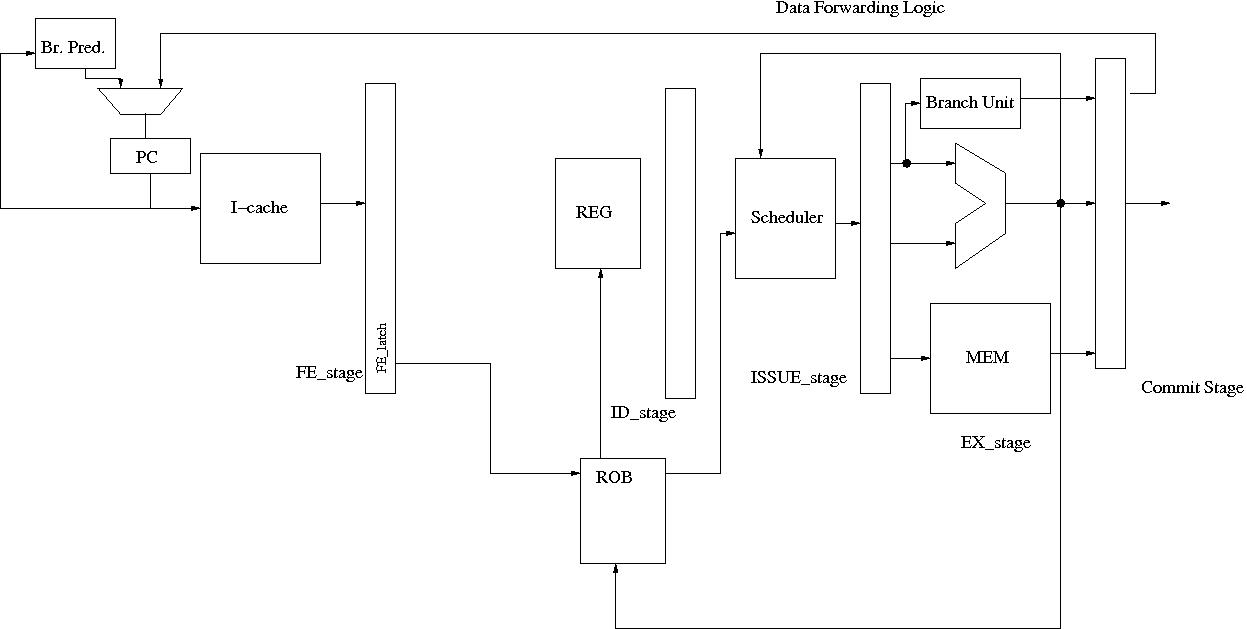

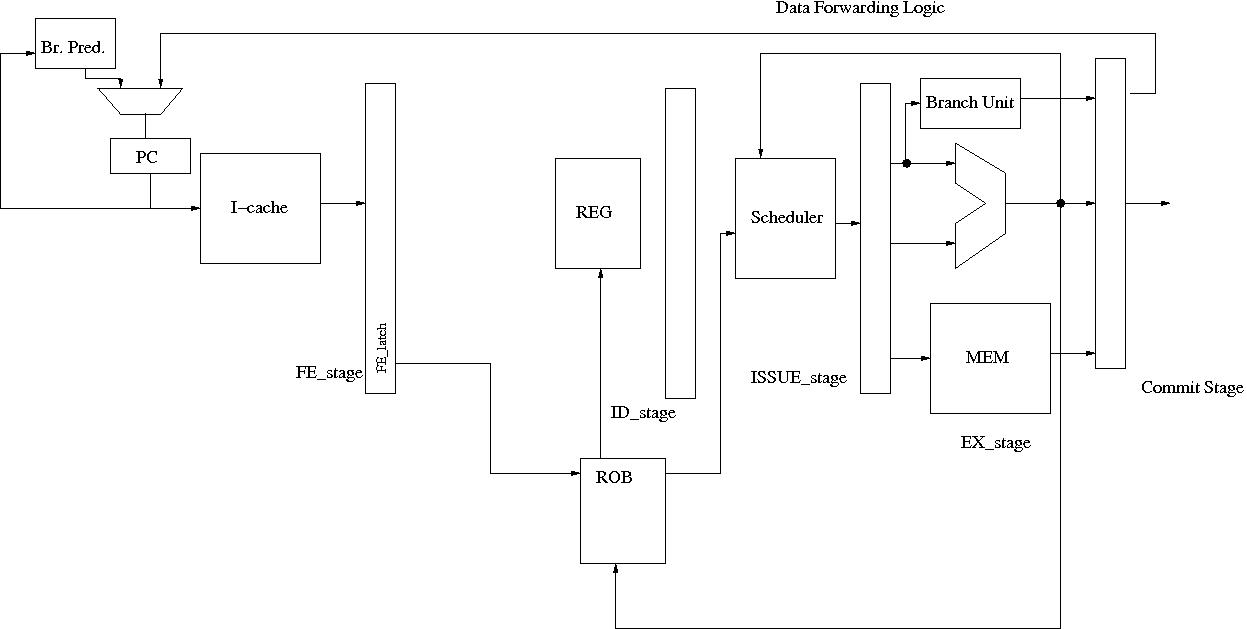

pipeline design

(2) a superscalar processor.

Now you extend your simulator to handle more than one instruction at a

cycle. It can fetch/decode/issue/execute/retire "KNOB_ISSUE_WIDTH" number of

instructions. Fetch, decode, issue, retire must be in order. We assume that

the pipeline has "KNOB_ISSUE_WIDTH" number of functional units. All

functional units are pipelined.

(3) Implementing out of order scheduling algorithm

To support out of order scheduling and in-order commit, now you change

your pipeline stages. Instructions will be renamed and instructions

can be executed out of order. Instructions must be retired in order.

To implement an out of order processor, there is a scheduler. To

support an in-order retirement, a ROB is also required.

In this assignment, you do not need latches other than the Fetch stage.

To reduce unnecessary code debugging, I suggest removing all the pipeline latches

other than FE_latch. You can remove the pipeline latch printf statements.

Pipeline changes:

Instead of FE/ID/EX/MEM/WB stages, now the processor has

FE/ID/ISSUE/EX/WB stages.

The major features of each pipeline are as follows:

FE_stage: it accesses the I-cache and it reads an op (instruction) from a trace file.

It access a branch predictor and predicts the next target address. Only conditional branches use a gshare branch predictor

and all other branches are always correctly predicted. We assume that the processor has a BTB so it knows whether an instruction is a branch or not

at FE_stage. You do not need to model the BTB. The fetched instructions are stored in the FE_stage latch.

ID_stage: Instructions are decoded at the beginning of the ID stage.

Instructions are sent to the ROB here. If there is no space in the ROB,

the processor stalls the FE_stage.

ISSUE_stage: In this stage, the processor inserts instructions

into the scheduler. Instructions access the register file when they

enter the scheduler. Up to KNOB_ISSUE_WIDTH instructions can be sent

to the scheduler. Ready instructions are selected in this stage. The

ready instructions are sent to the EX stage. Only up to

KNOB_ISSUE_WIDTH instructions can be selected. When the scheduler

selects instructions, it also checks the status of functional units.

There are KNOB_ISSUE_WIDTH number of functional units. If there is no

available functional unit, the instruction cannot be scheduled. (since

the number of functional units is the same as KNOB_ISSUE_WIDTH, in

this assignment there will no such a case that the processor cannot

schedule instructions because of not enough functional units.)

KNOB_ISSUE_WIDTH number of memory instructions can access the d-cache.

You must provide the feature of in-order scheduling

EX_stage:

All the functional units are pipelined.

Now, the d-cache is

accessed here. The processor also searches the load/store buffer (you

do not need to implement the load/store buffer in this assignment

since the d-cache is always hit in this assignment.) A branch

instruction is also resolved here. When an instruction finishes

execution, at that moment, it frees the corresponding scheduler entry.

WB_stage: An instruction is retired at this stage. A destination

register is available at this moment. The branch target address is sent to the PC register at this stage.

Hardware structure size

The scheduler size is determined by KNOB_SCHED_SIZE.

Rob size is determined by KNOB_ROB_SIZE.

The number of physical register is the same as KNOB_ROB_SIZE. (so your simulator probably does not need to have a separate physical register file.)

The load and store buffer. We also assume that the number of load/store buffer size is the same is KNOB_ROB_SIZE.

Data forwarding

An instruction becomes eligible for

execution in the cycle that follows the one in which the last operand

was forward from the EX stage. There are KNOB_ISSUE_WIDTH number of data forwarding paths from EX

stage.

KNOBS

New KNOBS

KNOB_ROB_SIZE: it sets the number of entries in the Rob (default value is 64)

KNOB_SCHED_SIZE: it sets the number of entries in the scheduler (default value is 8)

KNOB_OOO_SCHEDULER: It sets the scheduling policy. ( 0: in order scheduling 1: out of order scheduling)

KNOB_GHR_LENGTH: It decides the length of GHR. The number of gshare predictor entry is 2^(KNOB_GHR_LENGTH).

KNOB_DEBUG_PRINT: print a debug message. (0: no debug message 1: print debug message )

KNOB_ISSUE_WIDTH: It sets the number of fetch/decode/issue/schedule/execute/retire width

KNOBS from Assignment #1

KNOB_OUTPUT_FILE: set the output filename of the print_stats function

KNOB_TRACE_NAME: set the input trace file name

KNOB_READ_TRACE: (1): read trace and execute the simulator (0): execute the binary

You should not set 1 for both KNOB_WRITE_TRACE and KNOB_READ_TRACE. Only one of them has to be 1.

KNOB_MAX_SIM_COUNT: set the maximum cycle_count for the simulation

KNOB_MAX_INST_COUNT: set the maximum inst_count for the simulation

KNOB_PRINIT_PIPE_FREQ: set the frequency of calling print_pipeline() function

Grading

We will check branch predictor accuracies as we vary the length of GHR.

We will check IPC values to grade your homework. We will vary issue width.

We will also use the KNOB_DEBUG_PRINT knob to check in-order/OOO scheduling and in-order retirement. You must replace print_stats() function with the new one at add_me.txt file.

Please make it sure your simulation can be ended until the end of traces and remove all debug statements that you added.

Submission Guide

Please do not turn in pzip files(trace files). Trace file sizes are so huge so they will cause a lot of problems.

(Tar the lab2 directory. Gzip the tarfile and submit lab2.tar.gz file at T-square)

cd pin-2.8-36111-gcc.3.4.6-ia32_intel64-linux/source/tools

cd lab2

make clean

rm *.pzip

cd ..

tar cvf lab2.tar lab2

gzip lab2.tar

Please do not let your zombie jobs use all the machine resources!!

Note: Assignment #2 is significantly longer than Assignment #1. Please start early and please read faq before you start.

Part 2 20 pts : Simulation and analysis

Due: 11/2/10 before class. Hard copy only

Using your simulator, you will do performance analysis. You will generate traces which are at least longer than 10,000 instructions. Any applications are fine. Please do not turn in your traces!!

Include your simulation results in the report. The default configurations are

gshare history length: 8

issue width: 2

out of order scheduler

ROB size: 64

- Vary the issue width from 1 to 3 (1, 2 and 3) and discuss performance impacts. Test both in order scheduling and out of order scheduling mechanisms. Discuss how the performance improvements are different.

(Hint: why (IPC (width=2) - IPC(width=1)) >> (IPC(width=3) - IPC(width=2)) ? )

- Write two simple benchmarks that could have different branch prediction accuracies. Differences should be more than 5%. You could use existing benchmarks.

Simple floating point benchmarks such as matrix multiplications, PDE calculations typically have high branch prediction accuracy and complicated benchmarks such as bzip2, the simulator itself would have high branch misprediction rate.

Demonstrate the performance as you vary gshare history length (4,8,12).