Activity


On-body sensors provide a unique insight into the actions and behaviors of their owner. Their "first-person view" from the user's perspective greatly reduces some of the difficulties faced by context-sensing systems embedded in the environment, such as occlusion, scale, and interference. In addition, since the sensors travel with the user, they can often record data over a longer interval than systems based in a given office or home environment. We are interested in discovering, modeling, and recognizing the user's everyday activities in an effort to create automatic diaries and meaningful indices into these diaries. Such automatic diaries may prove useful in the treatment of chronic medical conditions or in after-action reporting for soldiers, emergency workers, or police. We are also interested in applying our research to develop contextually aware, proactive agents that aid the user in everyday tasks. Such systems could be used as reminder systems or cognitive aids for people with Alzheimer's disease or even for busy professionals. To date, we have developed systems that discover meaningful locations and patterns of travel from Global Positioning System (GPS) coordinates, recognize actions performed in an assembly task in a wood workshop, and identify complex procedures (specifically, the Towers of Hanoi) using computer vision. We have also investigated identifying indoor location from omnidirectional video and, separately, from infrared beacons.

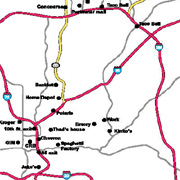

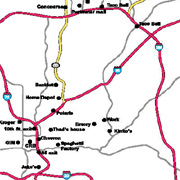

Location is often the focus of context-awareness research. Yet raw GPS coordinates or sensor readings are not useful unless they can be mapped to meaningful activities in the user's life. However, users often do not want to invest the time in specifying their own personal maps and schedules. We have developed a system that uses unsupervised learning to cluster GPS coordinates gathered over an extended period of time into locations (e.g., a university campus) and sub-locations (e.g., a given building). Once a location is established as a site the user commonly visits, the user is asked to name it, thus tying a semantic meaning to the location. The system generates a predictive model of the wearer's movement (e.g., if the user is at work, they will visit home next with 60% probability), establishes the user's routine behaviors (e.g., the user never goes to the grocery store on the way to work, only when traveling from work to home), and determines when a particular trip is unusual and therefore possibly important. In addition to GPS for outdoor positioning, we have explored recovering the user's location indoors using on-body omnidirectional cameras, inertial sensors combined with proximity sensors to detect footfalls and doorways, and self-powered infrared beacons.
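The steps described above (group GPS fixes into places, count movements between named places, flag rare trips) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the system's actual algorithm: the greedy radius clustering, the 200 m radius, the first-order Markov transition model, the 0.1 "unusual" threshold, and every function name (`haversine_m`, `cluster_places`, `transition_model`, `is_unusual`) are hypothetical choices made for illustration, and the coordinates and visit log are made up.

```python
import math
from collections import Counter, defaultdict

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(a, b):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def cluster_places(points, radius_m=200.0):
    """Hypothetical greedy radius clustering: each fix joins the first cluster whose
    running-mean centroid lies within radius_m, otherwise it seeds a new cluster.
    Averaging degrees is adequate for small clusters. Returns one id per point."""
    sums, counts, labels = [], [], []
    for lat, lon in points:
        for i, (slat, slon) in enumerate(sums):
            centroid = (slat / counts[i], slon / counts[i])
            if haversine_m((lat, lon), centroid) <= radius_m:
                sums[i] = (slat + lat, slon + lon)
                counts[i] += 1
                labels.append(i)
                break
        else:  # no existing cluster is close enough
            sums.append((lat, lon))
            counts.append(1)
            labels.append(len(sums) - 1)
    return labels


def transition_model(visits):
    """First-order Markov model: P(next place | current place) from a visit log."""
    counts = defaultdict(Counter)
    for here, there in zip(visits, visits[1:]):
        if here != there:  # consecutive records at the same place are not trips
            counts[here][there] += 1
    return {here: {there: n / sum(c.values()) for there, n in c.items()}
            for here, c in counts.items()}


def is_unusual(model, here, there, threshold=0.1):
    """Flag a trip whose estimated probability is below threshold (unseen trips count as 0)."""
    return model.get(here, {}).get(there, 0.0) < threshold


if __name__ == "__main__":
    fixes = [(33.7756, -84.3963), (33.7758, -84.3961),
             (33.7800, -84.4100), (33.7757, -84.3962)]
    print(cluster_places(fixes))  # [0, 0, 1, 0]

    # Made-up log of places that the user has already named.
    visits = ["home", "work", "home", "work", "home", "work", "gym",
              "work", "grocery", "home", "work", "home"]
    model = transition_model(visits)
    print(model["work"]["home"])                 # 0.6
    print(is_unusual(model, "home", "grocery"))  # True
```

The made-up log was chosen so that three of the five departures from work lead home, matching the 60% example in the text. `is_unusual(model, "home", "grocery")` returns `True` because the log never shows a grocery stop right after leaving home, mirroring the routine described above.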

Modeling schedules with GPS provides a relatively coarse sense of context. We are also interested in modeling activities on a finer scale. Using the Towers of Hanoi as a sample domain, we have shown that it is possible to monitor relatively complex activities for consistency even when not all of the data on the activity is available. In another experiment, we used microphones and accelerometers mounted on the user's wrists and arms to show that we can recognize the various actions (e.g., sawing, drilling, hammering, tightening a vise, opening a drawer) required for an assembly task in a wood workshop. Such systems could be developed into cognitive aids that remind the user of missed steps or important safety procedures. Similar systems might help track activities of daily living for clients in assisted-living communities, giving caregivers feedback on when a client may need more or less assistance. In addition, activity-sensing systems might serve as diagnostic tools and provide feedback on the effectiveness of specific medical therapies. We have begun one such project, which attempts to capture self-stimulatory episodes of children with autism to help behavioral therapists tune their treatment for a given child.
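One common way to recognize actions such as sawing or drilling from body-worn sensors is to train one hidden Markov model (HMM) per action and label a new segment with the model that assigns it the highest likelihood; HMMs appear in the autism work listed under Publications, but the sketch below is only an illustration, not the project's actual pipeline. It assumes accelerometer energy has already been quantized into two hypothetical symbols (0 = low motion, 1 = high motion), and the two-state parameters in `MODELS`, like the names `log_likelihood` and `classify`, are invented for illustration. The likelihood is computed with the scaled forward algorithm so that long sequences do not underflow.

```python
import math

# Hypothetical two-state, two-symbol HMMs given as (start, transition, emission).
# Symbols: 0 = low motion, 1 = high motion (assumed quantization of accelerometer energy).
MODELS = {
    "sawing": (                      # favors alternating back-and-forth strokes
        [0.5, 0.5],
        [[0.1, 0.9], [0.9, 0.1]],
        [[0.9, 0.1], [0.1, 0.9]],
    ),
    "drilling": (                    # favors sustained runs of the same motion level
        [0.5, 0.5],
        [[0.9, 0.1], [0.1, 0.9]],
        [[0.9, 0.1], [0.1, 0.9]],
    ),
}


def log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: log P(obs | HMM) for a discrete HMM; obs must be non-empty."""
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    log_p = 0.0
    for t in range(len(obs)):
        if t > 0:
            # Propagate one step through the transition matrix, then weight by emission.
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence is impossible under this model
        log_p += math.log(scale)
        alpha = [a / scale for a in alpha]  # renormalize to avoid underflow
    return log_p


def classify(obs, models):
    """Return the label whose HMM assigns obs the highest log-likelihood."""
    return max(models, key=lambda label: log_likelihood(obs, *models[label]))


if __name__ == "__main__":
    print(classify([0, 1, 0, 1, 0, 1], MODELS))  # sawing
    print(classify([1, 1, 1, 1, 1, 1], MODELS))  # drilling
```

The invented "sawing" model prefers switching between low and high motion, while the "drilling" model prefers staying in one state, so an alternating sequence is labeled sawing and a steady one drilling. In practice each model's parameters would be trained (e.g., with Baum-Welch) on labeled sensor data rather than set by hand.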

Related Projects

Towers of Hanoi

Autism

GPS Location Discovery

Publications

Human Activity Recognition Using Body-Worn Microphones and Accelerometers (submitted to PAMI, May 2005)

Recognizing Mimicked Autistic Self-Stimulatory Behaviors Using Hidden Markov Models (ISWC 2005)

A Wearable Interface for Topological Mapping and Localization in Indoor Environments (To be submitted to Pervasive 2006)

Recognizing and Discovering Human Actions from On-body Sensor Data (ICME, Amsterdam, The Netherlands, July 2005)

Recognizing Workshop Activity Using Body Worn Microphones and Accelerometers (Pervasive Computing, 2004)

Expectation Grammars: Leveraging High-Level Expectations for Activity Recognition (Computer Vision and Pattern Recognition Conference 2003, Madison, Wisconsin, June 2003)

SoundButton: Design of a Low Power Wearable Audio Classification System (ISWC, White Plains, NY, October 2003)

Using GPS to Learn Significant Locations and Predict Movement Across Multiple Users (Personal and Ubiquitous Computing, 2003)

Learning Significant Locations and Predicting User Movement with GPS (ISWC, Seattle, WA, October 2002)

Enabling Ad-Hoc Collaboration Through Schedule Learning and Prediction (CHI Workshop on Mobile Ad-Hoc Collaboration, Minneapolis, MN, April 2002)

Learning Visual Models of Social Engagement (International Workshop on Recognition, Analysis and Tracking of Faces and Gestures in Real-time Systems at ICCV (RATFG-RTS 2001), Vancouver, Canada, 2001)

Symbiotic Interfaces for Wearable Face Recognition (Human Computer Interaction International Workshop on Wearable Computing (HCII 2001), New Orleans, LA, August 2001)

Situation Aware Computing with Wearable Computers (in Fundamentals of Wearable Computers and Augmented Reality, W. Barfield and T. Caudell, editors, Lawrence Erlbaum Press, 2001)


Finding Location Using Omnidirectional Video on a Wearable Computing Platform (ISWC, Atlanta, GA, October 2000)

Visual Contextual Awareness in Wearable Computing (ISWC, Pittsburgh, PA, October 1998)

The Locust Swarm: An Environmentally-powered, Networkless Location and Messaging System (ISWC, Cambridge, MA, October 1997)

Gesture Pendant

Gesture Panel

Freedigiter

BlinkI

Perceptive Workbench